Indiana Needs to Lead the Nation in Digital 4.0

Industrial revolutions have powered transformational changes in human society for centuries. Today we are in the fourth industrial revolution, commonly known as Industry 4.0, driven by data, automation and artificial intelligence. Each revolution was marked by clear, identifiable technological advances that raised the output of goods and services as well as workforce productivity:

First Industrial Revolution | Industry 1.0

Mid-18th to mid-19th century – transition from hand production to machines through the use of steam and water power.

Second Industrial Revolution | Industry 2.0

Late 19th century to early 20th century – increase in industrial transportation, connectivity and production at scale through railroads, electricity, and telegraph networks.

Third Industrial Revolution | Industry 3.0

Mid-20th century to early 21st century – invention and wide adoption of computers and computer networks that resulted in a digital revolution.

Fourth Industrial Revolution | Industry 4.0

Early 21st century to now – growth in data generation and exchange and in the automation of manufacturing technologies and processes; in essence, the digital transformation of industry.

What distinguishes each successive industrial revolution is not only an increasingly compressed timeline for technology adoption but, more importantly, a significant increase in productivity. The arrival of the digital age is no different.

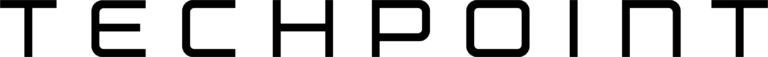

According to the Organisation for Economic Co-operation and Development (OECD), an intergovernmental organization that promotes economic development, a large body of research has documented positive links between the adoption of digital technologies and productivity at both the firm and industry levels. For example, OECD estimates suggest that a 10% increase in the share of firms using high-speed broadband internet (cloud computing) at the industry level is associated with a 1.4% increase in multi-factor productivity for the average firm in the industry after 1 year, and a 3.9% increase after 3 years, across EU countries (Gal et al., 2019).

The Digital Age.

The digital revolution has had such a broad impact on our society, well beyond the industrial and manufacturing sectors, that it is worth outlining its own stages, culminating in Digital 4.0.

Digital 1.0

1930s-1980s – the start of digital: the transition from analog (mechanical) to digital machines, from World War II to IBM's launch of its personal computer (PC) in 1981.

Digital 2.0

1980s-1990s – digital connectivity: wide adoption of the internet, delivering true digital connectivity (no longer requiring people, or information stored on computer tapes, to be transported to a computer site). The period culminated in the dot-com boom and the bursting of the bubble in the early 2000s.

Digital 3.0

2000s-2010s – rise of data analytics: the age of digital data, marked by large increases both in the generation and consumption of digital data and in the computing power available to process it. The latter part of the Digital 3.0 cycle in particular saw exponential growth in digital adoption in the way we live, work and consume information.

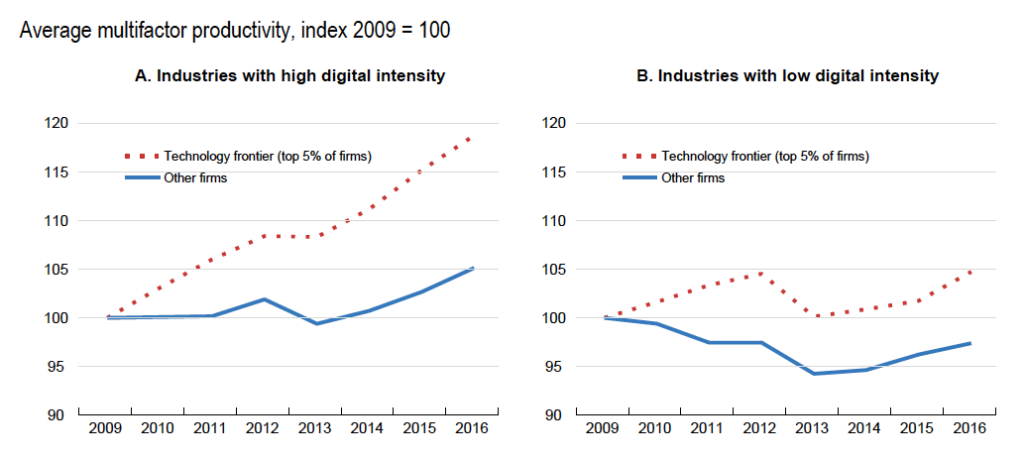

According to a recent Pitchbook article (Pitchbook: “The Post Digital Era – Setting the Stage for the Next Era of Technology Innovation”), the Great Recession ushered in an era of cheap money that fueled tech innovation and drove widespread scaling and adoption of digital technologies. When the Great Recession ended in 2009, fewer than 10% of Americans had smartphones, and the phones mostly operated on 3G networks. Today, more than 85% of Americans have smartphones, and they mostly operate on 5G networks. In 2009, there were roughly 4,400 Blockbuster locations, and it took more than half an hour to download a movie to a desktop computer. Today, there are no Blockbusters (apart from one novelty operation in Oregon), and you can download a movie to a mobile phone in less than a minute. In 2009, roughly half of Americans still had newspapers delivered to their homes, and there were fewer than a billion social media accounts. Today, fewer than a quarter of Americans have newspapers delivered to their homes, and there are more than 13 billion social media accounts.

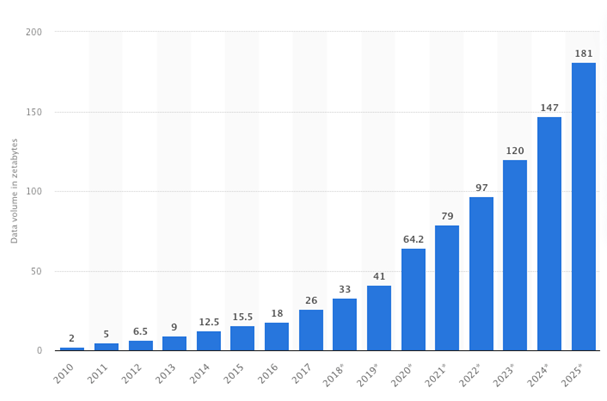

Volume of data/information created, captured, copied, and consumed worldwide from 2010 to 2020, with forecasts from 2021 to 2025 (source: https://www.statista.com/statistics/871513/worldwide-data-created/)

Digital 4.0

Late 2010s to now – era of artificial intelligence: enhanced automation and productivity gains across all industries, driven not only by data analytics but also by giant leaps in artificial intelligence that leverage massive amounts of data.

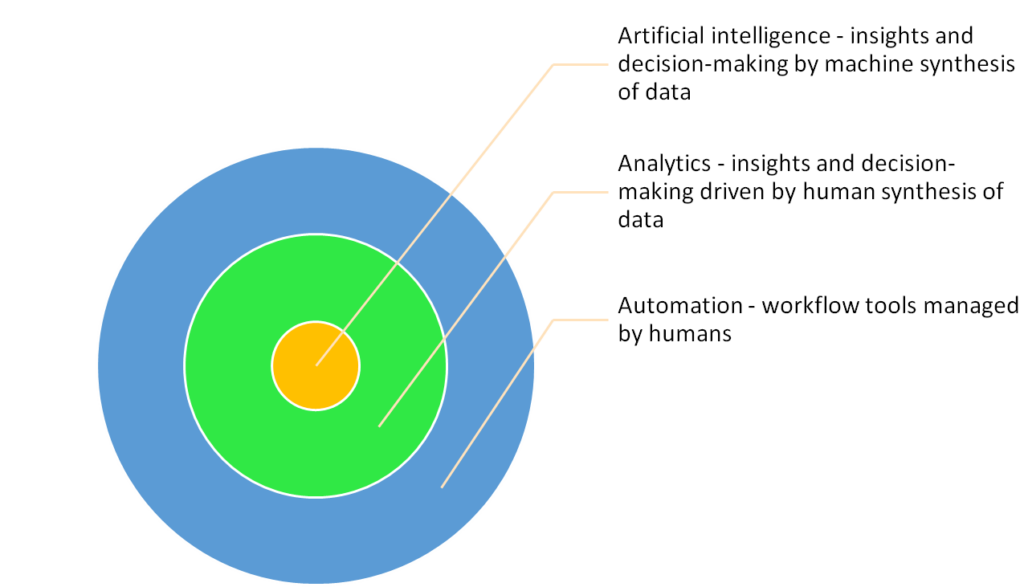

Overlaying these digital revolutions is the increased adoption of three As:

Digital 4.0 and Digital Adoption of Automation, Analytics, and Artificial Intelligence (AI)

If Digital 3.0 was primarily characterized by the first two As, automation and analytics, Digital 4.0 is powered by the commercial adoption of the third A, artificial intelligence.

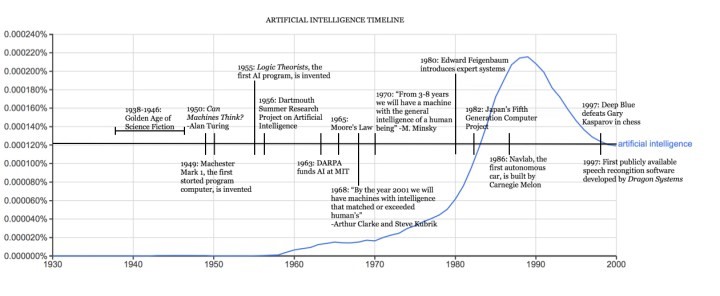

The concept of artificial intelligence, a machine that can think, dates back to the 1950s, when digital machines experienced bursts of technological advances after World War II. Computers could finally not only execute commands (take orders from humans) but also store them (remember what they did). Computing, however, was extremely expensive. Over time, generations of scientists and practitioners had to overcome technical and cost hurdles as the technology matured: prohibitively expensive computers, a lack of massive computing power, and sporadic government funding for advanced AI research.

Eventually, following Moore's Law, technology matured to a level that made mass adoption of AI possible. Moore's Law is the observation that the number of transistors on a microchip doubles roughly every two years, so we can expect the speed and capability of our computers to increase every two years even as we pay less for them.
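To make the compounding behind Moore's Law concrete, here is a minimal Python sketch. It assumes a clean doubling every two years, which is a rule of thumb rather than a physical law; `moore_growth_factor` is a hypothetical helper name used only for illustration.

```python
def moore_growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiple by which transistor count grows after `years`,
    assuming (hypothetically) one doubling every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Compare a few horizons under the two-year doubling assumption
    for years in (2, 10, 20):
        print(f"After {years} years: about {moore_growth_factor(years):,.0f}x the transistors")
```

Under that assumption, twenty years of doubling gives a multiple of 2^10 = 1,024, which helps explain how computing that was prohibitively expensive in the 1950s eventually became affordable at scale.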

Source: https://sitn.hms.harvard.edu/flash/2017/history-artificial-intelligence/

Timeline of AI Adoption.

From the 2010s onward, AI has been working its way into consumer and enterprise products, even though few users recognize the technology powering them. Below are selected examples of AI-powered products and milestones, according to an article in G2 on the history of artificial intelligence (source: https://www.g2.com/articles/history-of-artificial-intelligence).

2010: ImageNet launched the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), its annual AI object recognition competition.

2010: Microsoft launched Kinect for Xbox 360, the first gaming device that tracked human body movement using a 3D camera and infrared detection.

2011: Watson, a natural language question answering computer created by IBM, defeated two former Jeopardy! champions, Ken Jennings and Brad Rutter, in a televised game.

2011: Apple released Siri, a virtual assistant on Apple's iOS operating system. Siri uses a natural-language user interface to infer, observe, answer, and recommend things to its human user. It adapts to voice commands and projects an “individualized experience” per user.

2012: Jeff Dean and Andrew Ng (Google researchers) trained a large neural network running on 16,000 processors to recognize images of cats, without being given any labels, by showing it 10 million unlabeled images from YouTube videos.

2013: A research team from Carnegie Mellon University released Never Ending Image Learner (NEIL), a semantic machine learning system that could compare and analyze image relationships.

2014: Microsoft released Cortana, its version of a virtual assistant similar to Siri on iOS.

2014: Amazon created Amazon Alexa, a home assistant that developed into smart speakers that function as personal assistants.

2015-2017: Google DeepMind’s AlphaGo, a computer program that plays the board game Go, defeated various (human) champions.

2016: Google released Google Home, a smart speaker that uses AI to act as a “personal assistant” to help users remember tasks, create appointments, and search for information by voice.

2017: The Facebook Artificial Intelligence Research lab trained two “dialog agents” (chatbots) to communicate with each other in order to learn how to negotiate. However, as the chatbots conversed, they diverged from human language (programmed in English) and invented their own language to communicate with one another – exhibiting artificial intelligence to a great degree.

2018: The language-processing AI of Alibaba (a Chinese tech group) outscored humans on a Stanford reading comprehension test. The Alibaba model scored “82.44 against 82.30 on a set of 100,000 questions”: a narrow defeat, but a defeat nonetheless.

2018: Google developed BERT, the first “bidirectional, unsupervised language representation that can be used on a variety of natural language tasks using transfer learning.”

2018: Samsung introduced Bixby, a virtual assistant. Bixby’s functions include Voice, where the user can speak to Bixby and ask for answers, recommendations and suggestions; Vision, where Bixby’s “seeing” ability is built into the camera app and can see what the user sees (e.g., object identification, search, purchase, translation, landmark recognition); and Home, where Bixby uses app-based information to help and interact with the user (e.g., weather and fitness applications).

From 2019 on, advances in chatbots, virtual assistants, cybersecurity, robotic automation and autonomous vehicles have only accelerated, despite the challenges presented by the global pandemic. The mass adoption of OpenAI’s ChatGPT, from zero to over 100 million users in less than 4 months, marked yet another commercial milestone for AI in terms of scale and velocity.

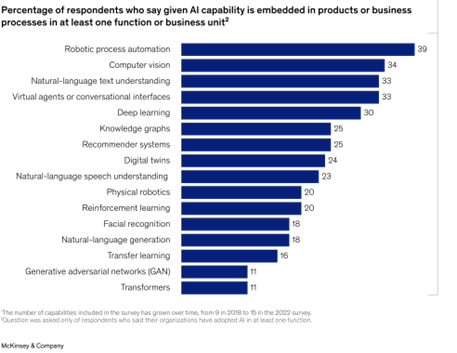

The last five years saw increased adoption of AI in enterprise applications, often as part of a holistic digital transformation effort that seeks to reinvent how products and services are ultimately delivered. A late-2022 McKinsey report highlighted a 2.5-fold increase in AI business adoption, from 20% of organizations in 2017 to 50% in 2022, and a doubling of the average number of AI capabilities that organizations use, from 1.9 in 2018 to 3.8 in 2022. (Source: https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-in-2022-and-a-half-decade-in-review)
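Both McKinsey figures are simple ratios. The snippet below is our own illustration using only the numbers quoted above; the variable and function names are hypothetical. It shows how the "2.5-fold" and "doubling" claims follow from the data, and contrasts a growth multiple with a percentage-point change, which are easy to conflate when reading survey results.

```python
def growth_multiple(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


# Figures as quoted from the late-2022 McKinsey report
adoption_2017_pct, adoption_2022_pct = 20, 50    # % of organizations adopting AI
capabilities_2018, capabilities_2022 = 1.9, 3.8  # average AI capabilities per organization

adoption_multiple = growth_multiple(adoption_2017_pct, adoption_2022_pct)
adoption_change_pts = adoption_2022_pct - adoption_2017_pct  # absolute change, not a ratio
capability_multiple = growth_multiple(capabilities_2018, capabilities_2022)

print(f"AI adoption: {adoption_multiple:.1f}x ({adoption_change_pts} percentage points)")
print(f"AI capabilities per organization: {capability_multiple:.1f}x")
```

This prints a 2.5x multiple (a rise of 30 percentage points) for adoption and a 2.0x multiple for capabilities per organization.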

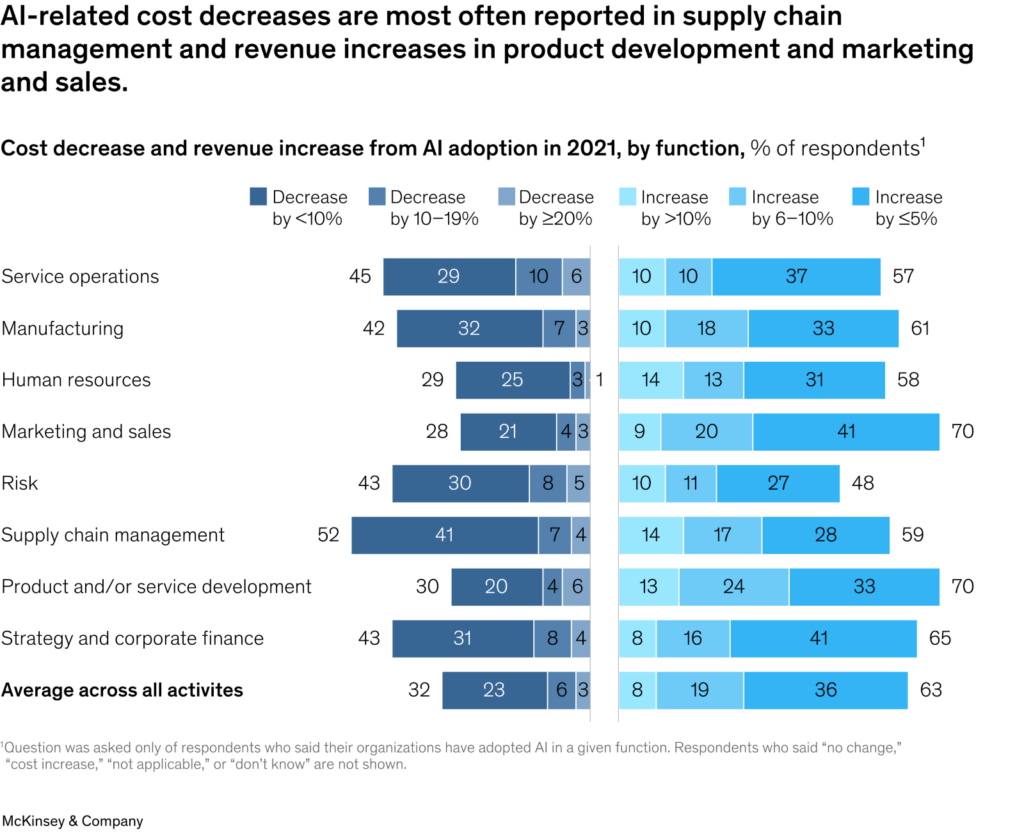

Most encouragingly, AI adoption has started to see wider budget support and impact on both the business top line and bottom line, further bolstering the case for continued and sustained technology investment. According to the same McKinsey report, 40 percent of respondents at organizations using AI reported that more than 5 percent of their digital budgets went to AI in 2018, compared with 52 percent who reported that level of investment in 2022. Going forward, 63 percent of respondents say they expect their organizations’ investment to increase over the next three years.

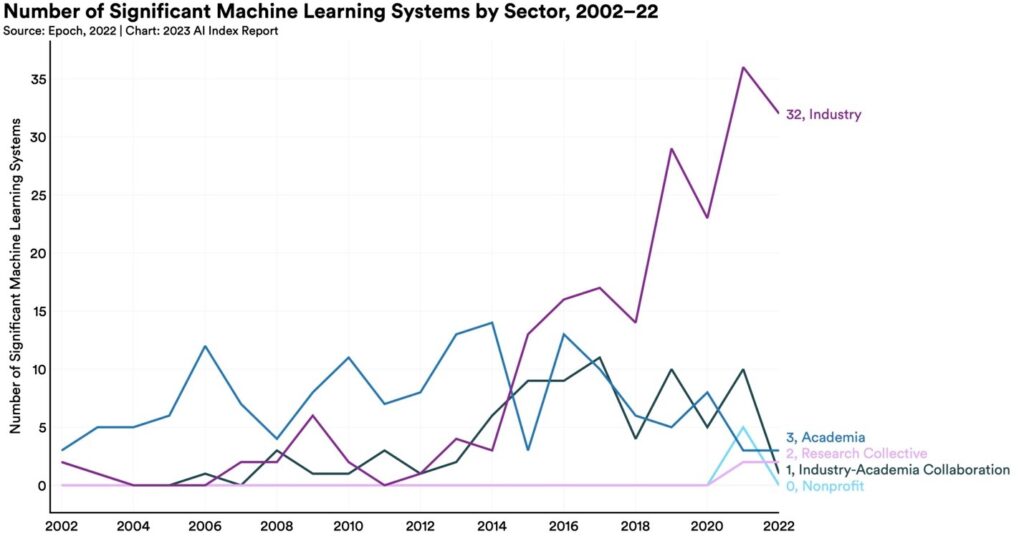

We are finally reaching a tipping point where the era of AI is led by industry, not only in implementation but also in research and development of machine learning models. In 2022, there were 32 significant industry-produced machine learning models, compared with just three produced by academia. Building state-of-the-art AI systems increasingly requires large amounts of data, computing power and money, resources that industry actors inherently possess in greater amounts than nonprofits and academia. (Source: https://aiindex.stanford.edu/report/) Such technology invention within industry will in turn further fuel AI adoption.

Digital Transformation into Digital 4.0 – An Imperative for Indiana.

Tech is becoming a key driver of economic growth across sectors and around the globe, through productivity gains from digital transformation, cutting-edge tech hub initiatives and venture capital investment.

Tech now contributes over $50 billion to our state’s GDP and employs 118,000 people. Nearly three-quarters of our tech workforce is employed in non-core-tech sectors such as agriculture, manufacturing, logistics and healthcare. Digital innovation also continues to dominate startup and venture activity in Indiana and attracts the majority of venture capital investment dollars. Becoming a top-10 national leader in tech is no longer a nice-to-have but an imperative, and we can’t succeed in that endeavor without tech talent and digital innovation.

According to a 2021 Brookings study, slow technology adoption keeps productivity and wages low. Industry and firm productivity growth (the efficiency with which enterprises convert inputs into outputs) is critical to prosperity, but it has been declining in Indiana. Economy-wide, the state's efficiency has slumped to around 15% below the national level. Especially concerning is the slipping performance of the state’s advanced-industry sector: a collection of 46 R&D- and STEM-worker-intensive industries in Indiana highlighted by Brookings, ranging from biopharma manufacturing and medical devices to automotive, R&D consulting and technology. Between 2007 and 2019, advanced-industry productivity in Indiana grew at a paltry 0.4% annually, from $285,100 to $298,300 per worker. By comparison, real output per worker in advanced industries across the nation grew 2.7% a year during this period, reaching $375,000 per worker in 2019 and implying a productivity gap of about 20% between the state and the nation. This reverses the slight productivity advantage of about 5% that the state’s advanced-industry sector held over the nation in 2007.
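The Brookings figures can be cross-checked with the standard compound annual growth rate (CAGR) formula, (end / start)^(1 / years) - 1. The sketch below assumes a 12-year span (2007 to 2019) and backs out the implied 2007 national level from the reported 2.7% annual growth; the helper and variable names are ours, not Brookings'.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


YEARS = 2019 - 2007  # 12-year span assumed from the study period

# Advanced-industry real output per worker (USD), as reported
indiana_2007, indiana_2019 = 285_100, 298_300
national_2019 = 375_000
national_growth = 0.027  # reported national annual growth rate

indiana_growth = cagr(indiana_2007, indiana_2019, YEARS)

# Implied 2007 national level: discount the 2019 value by 12 years of 2.7% growth
national_2007 = national_2019 / (1 + national_growth) ** YEARS

gap_2019 = 1 - indiana_2019 / national_2019           # how far Indiana trails in 2019
advantage_2007 = indiana_2007 / national_2007 - 1     # how far Indiana led in 2007

print(f"Indiana annual growth, 2007-2019: {indiana_growth:.1%}")
print(f"Implied national level in 2007: ${national_2007:,.0f}")
print(f"Indiana 2007 advantage over nation: {advantage_2007:.0%}")
print(f"Indiana 2019 gap below nation: {gap_2019:.0%}")
```

Run as-is, it reproduces the reported figures to rounding: roughly 0.4% annual growth for Indiana, an implied advantage of about 5% in 2007, and a gap of about 20% in 2019.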

Hence, Digital 4.0 is not only an innovation imperative but also a prerequisite for our state to remain economically competitive. Building the foundation for Digital 4.0 requires expansion in all four of the areas below:

- Digital connectivity

- Digital transformation in midsized companies and large enterprises

- Digital innovation and entrepreneurship

- Digital skills alignment from K-12 to tech employers across sectors

As a mission-driven organization for the industry, we have worked with partners in the public, private and academic sectors to build holistic, collaborative and aligned strategies for each. It is time to power our state and its industries forward!